Preface

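I have recently been looking into ways of integrating OpenStack and Kubernetes. According to [1], there are essentially two approaches: deploy K8s on top of the OpenStack platform, or integrate K8s with OpenStack components. The advantage of the first approach is that K8s containers inherit the multi-tenancy of the virtual machines and are therefore well isolated. Its obvious drawback is that K8s runs yet another overlay network nested on top of the VM network, which makes fine-grained control of the K8s network difficult. The second approach integrates K8s with some of the OpenStack components so that the two fit together well, achieving a "1+1>2" effect. Magnum is OpenStack's typical solution of this kind: it handles container orchestration and can quickly deploy a Swarm, K8s, or Mesos cluster.

This article only covers how to install and deploy Magnum in OpenStack and the problems encountered along the way.

I also want to vent a little. Starting with the Pike release, the OpenStack community deprecated auth_uri and Keystone's port 35357, switching everything to the public port 5000 and to www_authenticate_uri for Keystone authentication. The core components have been converted, but the non-core components have not fully followed. Both magnum-api and Heat ran into this kind of problem during installation.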

1. Preparing to install Magnum

According to the official component description [2], the required components are:

- Keystone-Identity service

- Glance-Image service

- Nova-Compute service

- Neutron-Networking service

- Heat-Orchestration service

The optional components are:

- Cinder-Block Storage service

- Octavia-Load Balancer as a Service (LBaaS v2)

- Barbican-Key Manager service

- Swift-Object Storage service

- Ironic-Bare Metal service

This installation uses the Rocky release, and the Neutron network is built on OVS + VXLAN.

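2. Installing Magnum

My OpenStack environment was installed entirely by hand following the official guide, and Magnum was installed manually as well. The operating system is Ubuntu 18.04 LTS, because the Rocky deb packages are only available for 18.04. The magnum-api version is 7.0.1-0ubuntu1~cloud0.

I followed the installation guide [3]. Installation and configuration are very simple, but the official configuration documentation had not been updated in time and the deb packages lag behind the code, so I hit a lot of strange bugs. While tracking them down it was also hard to pick the right keywords, so searches often did not turn up the right answer.

Per the official documentation, after installing Magnum you run openstack coe service list to verify the installation. If the command fails, check /var/log/magnum/magnum-api.log. However, a successful run of that command does not mean the Magnum service actually works. In later use, the log to watch is mainly the conductor log, /var/log/magnum/magnum-conductor.log.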
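Since most of the troubleshooting below comes down to reading magnum-conductor.log, a small filter for ERROR lines can save some scrolling. The following is only a sketch: the regular expression assumes the `timestamp pid LEVEL logger message` layout seen in the log excerpts quoted in this article, and the function name `error_lines` is made up for illustration.

```python
import re

# Layout assumed from the excerpts quoted in this article:
# "2019-04-09 18:42:26.505 32428 ERROR some.logger message..."
LOG_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d+) "
    r"(?P<pid>\d+) (?P<level>[A-Z]+) (?P<logger>\S+) (?P<msg>.*)$"
)


def error_lines(text):
    """Return (logger, message) pairs for every ERROR line in the log text."""
    found = []
    for line in text.splitlines():
        match = LOG_LINE.match(line)
        if match and match.group("level") == "ERROR":
            found.append((match.group("logger"), match.group("msg")))
    return found


if __name__ == "__main__":
    sample = (
        "2019-04-09 18:42:26.505 32428 ERROR "
        "magnum.conductor.handlers.common.cert_manager "
        "AuthorizationFailure: unexpected keystone client error occurred: "
        "publicURL endpoint for key-manager service not found"
    )
    for logger, msg in error_lines(sample):
        print(logger, "->", msg)
```

In practice the text would be read from /var/log/magnum/magnum-conductor.log; lines that do not match the assumed layout are simply skipped.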

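2.1 magnum-api error

magnum-api fails with the error shown below. According to [4], the latest stable code already contains the fix, but the installed deb package was not built from that code.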

File "/usr/lib/python2.7/dist-packages/magnum/common/keystone.py", line 47, in auth_url

return CONF[ksconf.CFG_LEGACY_GROUP].auth_uri.replace('v2.0', 'v3')

AttributeError: 'NoneType' object has no attribute 'replace'

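The fix is to apply the patch from "Add support for www_authentication_uri" by hand, editing the file at the failing line:

    vim /usr/lib/python2.7/dist-packages/magnum/common/keystone.py +47

After the change you must restart the Magnum services, otherwise the modified code does not take effect.

    service magnum-api restart
    service magnum-conductor restart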
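The idea behind the patch in 2.1 can be illustrated outside of Magnum. The traceback shows auth_uri being None once only www_authenticate_uri is configured, so the lookup needs a fallback. The following is a minimal sketch of that logic, not the upstream patch itself: the function name `resolve_auth_url` is hypothetical, and a plain dict stands in for the oslo.config group that Magnum reads.

```python
def resolve_auth_url(legacy_conf):
    """Pick the Keystone URL from a [keystone_authtoken]-like mapping.

    `legacy_conf` is a plain dict used here as a stand-in for the real
    configuration group (an assumption made for illustration).
    """
    # Prefer the new option name and fall back to the deprecated one.
    auth_uri = legacy_conf.get("www_authenticate_uri") or legacy_conf.get("auth_uri")
    if auth_uri is None:
        # Neither option is set: return None instead of calling
        # .replace() on None, which is what raised the AttributeError.
        return None
    return auth_uri.replace("v2.0", "v3")


if __name__ == "__main__":
    print(resolve_auth_url({"www_authenticate_uri": "http://ctl01:5000/v2.0"}))
    print(resolve_auth_url({}))
```

The only behavioural change compared with the failing line is the fallback and the None guard. The `replace('v2.0', 'v3')` step is kept as it appears in the traceback.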

2.2 Specifying the docker volume size for the swarm cluster

Creating a cluster template as described in the documentation fails with an error:

    root@ctl01:/home/xdnsadmin# openstack coe cluster template create swarm-cluster-template --image fedora-atomic-latest --external-network Provider --dns-nameserver 223.5.5.5 --master-flavor m1.small --flavor m1.small --coe swarm

The fix is to specify a volume size. According to the official documentation Magnum can also be used without the Cinder service. I did not verify this, because my cluster has Cinder installed.

    openstack coe cluster template create swarm-cluster-template --image fedora-atomic-latest --external-network Provider --dns-nameserver 223.5.5.5 --master-flavor m1.small --flavor m1.small --coe swarm --docker-volume-size 10

This command still has a problem; see 2.5.

2.2 docker volume_type

During installation an invalid volume type error appeared. According to [5], a volume type has to be created and then configured in Magnum.

ERROR heat.engine.resource raise exception.ResourceFailure(message, self, action=self.action)

ERROR heat.engine.resource ResourceFailure: resources[0]: Property error: resources.docker_volume.properties.volume_type: Error validating value '': The VolumeType () could not be found.

1) Check if you have any volume types defined.

    openstack volume type list

2) If there are none, create one:

    openstack volume type create volType1 --description "Fix for Magnum" --public

3) Then in /etc/magnum/magnum.conf add this line in the [cinder] section:

    default_docker_volume_type = volType1
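Step 3 can also be scripted. The sketch below uses Python's standard configparser to set one option in one section of an INI-style text. The helper name `set_option` is made up for illustration, and it assumes magnum.conf is plain enough for configparser to parse. Note that configparser drops comments when writing, so this is better suited to generated files than to a hand-annotated config.

```python
import configparser
import io


def set_option(conf_text, section, option, value):
    """Return conf_text with [section] option = value set (added if missing)."""
    # strict=False tolerates repeated options; interpolation=None keeps
    # any '%' characters in values literal.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = str  # keep option names case-sensitive
    parser.read_string(conf_text)
    if not parser.has_section(section):
        parser.add_section(section)
    parser.set(section, option, value)
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


if __name__ == "__main__":
    original = "[DEFAULT]\ndebug = false\n"
    print(set_option(original, "cinder", "default_docker_volume_type", "volType1"))
```

The Magnum services still need a restart afterwards for the new value to be picked up.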

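2.3 Barbican service not installed

Following the documentation, I chose the recommended barbican option in [certificates] to store the certificates. My OpenStack cluster does not have the Barbican service installed, which produced the error below. At the time I did not spot the cause and kept assuming that Keystone's publicURL endpoint was unreachable.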

2019-04-09 18:42:26.505 32428 ERROR magnum.conductor.handlers.common.cert_manager [req-ffd4b077-80ee-4846-bdc0-84817aa699a5 - - - - -] Failed to generate certificates for Cluster: 03cd9a72-c980-4947-b273-d9bcb88a6913: AuthorizationFailure: unexpected keystone client error occurred: publicURL endpoint for key-manager service not found

……….

2019-04-09 18:42:26.505 32428 ERROR magnum.conductor.handlers.common.cert_manager connection = get_admin_clients().barbican()

2019-04-09 18:42:26.505 32428 ERROR magnum.conductor.handlers.common.cert_manager File "/usr/lib/python2.7/dist-packages/magnum/common/exception.py", line 65, in wrapped

2019-04-09 18:42:26.505 32428 ERROR magnum.conductor.handlers.common.cert_manager % sys.exc_info()[1])

2019-04-09 18:42:26.505 32428 ERROR magnum.conductor.handlers.common.cert_manager AuthorizationFailure: unexpected keystone client error occurred: publicURL endpoint for key-manager service not found

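The fix is simple: install the Barbican service, or store the certificates with x509keypair or local instead.

    [certificates]

If you use local, you also need to create the directory /var/lib/magnum/certificates/ and change its owner and group to magnum (sudo chown -R magnum:magnum /var/lib/magnum/certificates/). Otherwise the following error appears: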

2019-04-10 15:40:50.675 20235 ERROR magnum.conductor.handlers.common.cert_manager [req-c31e7588-a486-4ea8-8adc-16ff8f37b284 - - - - -] Failed to generate certificates for Cluster: e5790f96-e8c5-49c5-82f9-87faf8438585: CertificateStorageException: Could not store certificate: [Errno 2] No such file or directory: '/var/lib/magnum/certificates/5e8beb02-9378-4e8c-8b4d-1cae933de665.crt'
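The directory preparation for the local certificate store can be scripted as well. This is a minimal sketch with a hypothetical helper name, `prepare_cert_dir`. Changing ownership needs root, so the owner and group are optional arguments and the demo below only touches a temporary directory.

```python
import os
import shutil
import tempfile


def prepare_cert_dir(path, owner=None, group=None):
    """Create the certificate directory and optionally hand it to owner/group."""
    # exist_ok=True makes the call safe to repeat.
    os.makedirs(path, mode=0o750, exist_ok=True)
    if owner is not None or group is not None:
        # Requires sufficient privileges, like `chown` on the command line.
        shutil.chown(path, user=owner, group=group)
    return path


if __name__ == "__main__":
    # Demo against a throwaway location rather than /var/lib/magnum.
    demo_root = tempfile.mkdtemp()
    print(prepare_cert_dir(os.path.join(demo_root, "certificates")))
```

On the controller the call would be `prepare_cert_dir("/var/lib/magnum/certificates", owner="magnum", group="magnum")`, run as root, which mirrors the mkdir and chown steps described above.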

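While fixing this problem I also noticed a warning that turned out to have a big impact on later use:

    Auth plugin and its options for service user must be provided in [keystone_auth] section. Using values from [keystone_authtoken] section is deprecated.: MissingRequiredOptions: Auth plugin requires parameters which were not given: auth_url

A [keystone_auth] section has to be added to /etc/magnum/magnum.conf. This also ties in with the next problem, 2.4.

    [keystone_auth]

2.4 swarm cluster creation times out

With the magnum-conductor problems above out of the way, I started creating the swarm cluster, but creation timed out (the timeout is 60 minutes) and the cluster's stack failed to start. The cause is that, following the official documentation, every service endpoint had been created as http://ctl01:xxx. The swarm cluster nodes need to talk to the OpenStack services, but they cannot resolve the hostname ctl01 to an IP address. The public URL of every endpoint therefore has to be replaced with one that uses a reachable IP address.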

ERROR oslo_messaging.rpc.server [req-4a8e8e2d-cae0-4113-a49d-57bb91c03b8d - - - - -] Exception during message handling: EndpointNotFound: http://ctl01:9511/v1 endpoint for identity service not found

….

ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/dist-packages/magnum/conductor/handlers/cluster_conductor.py", line 68, in cluster_create

ERROR oslo_messaging.rpc.server cluster_driver.create_cluster(context, cluster, create_timeout)

….

ERROR oslo_messaging.rpc.server File "/usr/lib/python2.7/dist-packages/keystoneauth1/access/service_catalog.py", line 464, in endpoint_data_for

ERROR oslo_messaging.rpc.server raise exceptions.EndpointNotFound(msg)

ERROR oslo_messaging.rpc.server EndpointNotFound: http://ctl01:9511/v1 endpoint for identity service not found

Use the command openstack endpoint list | grep public to inspect the relevant endpoints.
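Once the public URLs have been collected from that listing, the ones that still rely on a hostname can be picked out mechanically. The sketch below uses only the standard library. The function name `endpoints_using_hostnames` is hypothetical, and 192.0.2.10 is a documentation-range placeholder, not an address from my environment.

```python
import ipaddress
from urllib.parse import urlsplit


def endpoints_using_hostnames(urls):
    """Return the URLs whose host part is a name rather than an IP address."""
    flagged = []
    for url in urls:
        host = urlsplit(url).hostname
        if host is None:
            continue  # not a URL with a network location
        try:
            ipaddress.ip_address(host)
        except ValueError:
            # Not a literal IPv4/IPv6 address, so cluster nodes would have
            # to resolve it through DNS or /etc/hosts.
            flagged.append(url)
    return flagged


if __name__ == "__main__":
    public_urls = [
        "http://ctl01:9511/v1",        # hostname, as in the failing setup
        "http://192.0.2.10:5000/v3",   # placeholder IP address
    ]
    for url in endpoints_using_hostnames(public_urls):
        print("needs an IP or resolvable name:", url)
```

A flagged URL is not necessarily wrong. It only becomes a problem when the cluster nodes have no way of resolving the name, which was the case for ctl01 here.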

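2.5 swarm-cluster fails to start

After solving 2.4 a new problem appeared: the swarm-cluster was created successfully, but its services reported errors. At this point you can log in to the swarm master node through its floating IP as the user fedora with the key mykey. The cloud-init log /var/log/cloud-init-output.log shows the following errors: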

/var/lib/cloud/instance/scripts/part-006: line 13: /etc/etcd/etcd.conf: No such file or directory

/var/lib/cloud/instance/scripts/part-006: line 26: /etc/etcd/etcd.conf: No such file or directory

/var/lib/cloud/instance/scripts/part-006: line 38: /etc/etcd/etcd.conf: No such file or directory

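etcd fails to initialize, which in turn prevents swarm-manager from starting. This is a fairly serious problem because the cause is hard to track down. I eventually found the answer on the OpenStack mailing list [6]: the root cause is the wrong COE. It should be swarm-mode, not swarm as written in the installation guide, which is a real trap.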

$openstack coe cluster template show docker-swarm

| docker_storage_driver | devicemapper |

| network_driver | docker |

| coe | swarm-mode |

Never got the "swarm" driver to work, you should use "swarm-mode" instead which uses native Docker clustering without etcd.

Also note that the Fedora image must be Fedora-Atomic-27-xx. Images after Fedora 27 changed substantially, and the cloud-init scripts no longer work with them. The patch "swarm-mode allow TCP port 2377 to swarm master node" is needed as well: edit line 247 of magnum/drivers/swarm_fedora_atomic_v2/templates/swarmcluster.yaml and add the following.

    - protocol: tcp

Then:

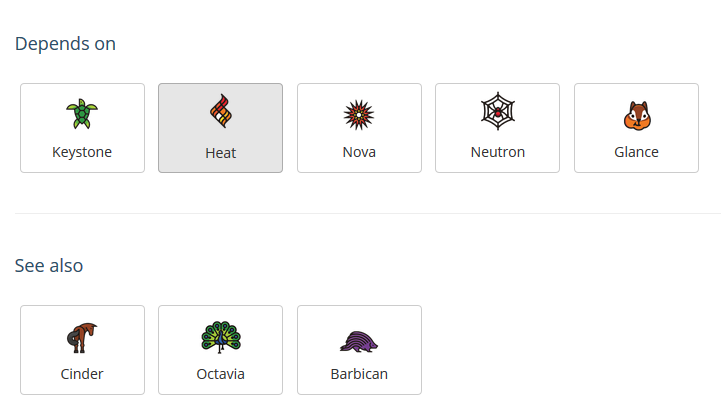

1) Recreate the swarm-cluster template

    root@ctl01:/home/xdnsadmin/workplace/magnum/swarm/cluster# openstack coe cluster template create swarm-cluster-template --image fedora-atomic-latest --external-network Provider --dns-nameserver 223.5.5.5 --master-flavor m1.small --flavor m1.small --coe swarm-mode --docker-volume-size 10

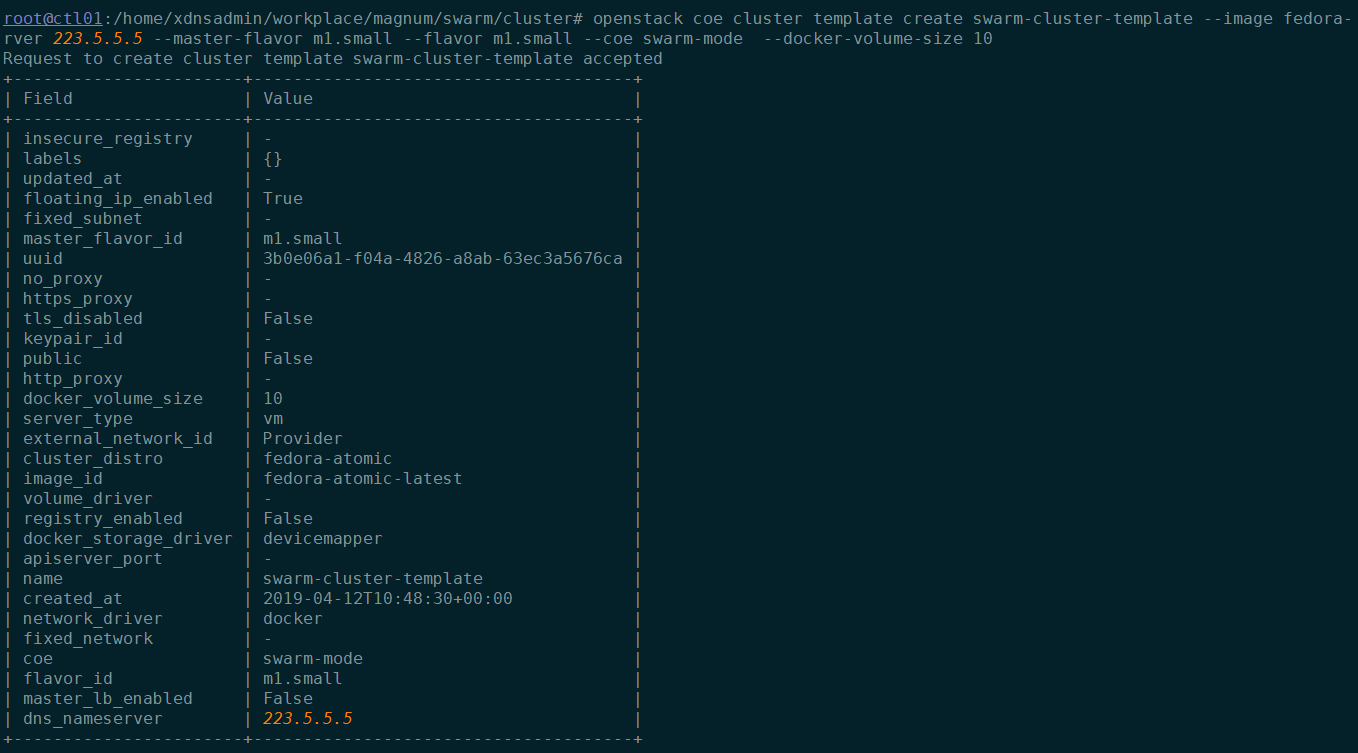

2) Launch the swarm-cluster

    root@ctl01:/home/xdnsadmin/workplace/magnum/swarm/cluster# openstack coe cluster create swarm-cluster --cluster-template swarm-cluster-template --master-count 1 --node-count 1 --keypair mykey

Wait for the cluster to finish creating.

3) Configure access to the swarm-cluster

First generate the certificates, then export the environment variables. After that the swarm cluster is accessible (the controller node needs the docker client installed).

    root@ctl01:/home/xdnsadmin/workplace/magnum/swarm/cluster# $(openstack coe cluster config swarm-cluster --dir /home/xdnsadmin/workplace/magnum/swarm/cluster)

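2.6 standard_init_linux.go:178: exec user process caused "permission denied"

This error is related to the storage driver on the swarm nodes. While chasing the earlier swarm startup failure I was led astray and added --docker-storage-driver overlay to the swarm-cluster template, and after that containers could not start. According to [7], the cause lies in which storage drivers are supported.

    root@ctl01:/home/xdnsadmin/workplace/magnum/swarm/cluster# docker -H 10.253.0.111:2375 run busybox echo "Hello from Docker!"

Docker currently supports the following storage drivers: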

| Technology | Storage driver name |
|---|---|
| OverlayFS | overlay |
| AUFS | aufs |
| Btrfs | btrfs |
| Device Mapper | devicemapper |
| VFS | vfs |
| ZFS | zfs |

For a detailed comparison, see the article "Docker之几种storage-driver比较" (a comparison of several Docker storage drivers).
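The template pitfalls from this article (missing volume size, the swarm COE, and the overlay storage driver) can be collected into a small pre-flight check. This is a sketch only: the function name `template_warnings` is made up, the dict stands in for whatever openstack coe cluster template show reports, and the key docker_volume_size is an assumption derived from the --docker-volume-size flag. The rules encode what failed in my Rocky environment, not general Magnum requirements.

```python
def template_warnings(template):
    """Return warnings for template settings that caused trouble in this article."""
    warnings = []
    if template.get("coe") == "swarm":
        warnings.append("coe 'swarm' failed during etcd setup; 'swarm-mode' worked")
    if template.get("docker_storage_driver") == "overlay":
        warnings.append("storage driver 'overlay' led to 'permission denied' on container start")
    if not template.get("docker_volume_size"):
        warnings.append("no docker volume size set; template creation failed without it")
    return warnings


if __name__ == "__main__":
    candidate = {"coe": "swarm", "docker_storage_driver": "overlay"}
    for warning in template_warnings(candidate):
        print("WARNING:", warning)
```

A template with coe swarm-mode, storage driver devicemapper, and a volume size of 10 passes without warnings, which matches the configuration that finally worked.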

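That is the whole story of installing Magnum on OpenStack Rocky and working through its pitfalls. Enough said: I had only just finished installing Rocky when Stein was released, so off I go to step on the next set of landmines - -! Open source is great.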

[References]

1) 如何把OpenStack和Kubernetes结合在一起来构建容器云平台 (How to combine OpenStack and Kubernetes to build a container cloud platform)

2) Magnum - Container Orchestration Engine Provisioning

4) magnum-api not working with www_authenticate_uri

5) magnum cluster create k8s cluster Error: ResourceFailure

6) Fwd: openstack queens magnum error

7) standard_init_linux.go:175: exec user process caused "permission denied"