I. Preface

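A year ago I spent some time studying K8S. At first its concepts left me completely confused, but as I dug deeper I came to understand them and followed cloudman's tutorial to build a k8s cluster. Because of the Great Firewall, building a cluster was quite laborious back then, so once the experiments had given me a basic grasp of k8s I stopped there. A new project now requires me to revisit k8s, so I am taking notes on the setup process. One piece of good news along the way: the Docker images k8s needs are now available from mirror providers in China, such as Alibaba Cloud and Docker Hub, which is a lifesaver. Since I already have some background, this article does not explain basic k8s concepts or what each command means.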

The setup mainly follows molscar's article "使用kubeadm 部署 Kubernetes(国内环境)" (Deploying Kubernetes with kubeadm in a mainland-China network).

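II. Environment Preparation

A k8s cluster needs at least one master node and one slave (worker) node. Each node must meet the following requirements: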

- Operating system, one of:
  - Ubuntu 16.04+
  - Debian 9
  - CentOS 7
  - RHEL 7
  - Fedora 25/26 (best-effort)
  - HypriotOS v1.0.1+
  - Container Linux (tested with 1800.6.0)
- 2+ GB RAM
- 2+ CPUs
- The required ports open (not blocked by security groups or firewalls)
- Swap disabled
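The hardware and swap requirements above can be checked on each node before installing anything. The following is a minimal POSIX shell sketch, assuming a Linux host with `/proc`; the function names `meets_min` and `swap_off` and the `MIN_MEM_KB` threshold are hypothetical choices for illustration, not part of kubeadm (kubeadm also runs its own preflight checks during `init` and `join`).

```shell
#!/bin/sh
# Preflight sketch for the node requirements above (2+ CPUs, ~2 GB RAM, swap off).
# It only reports what it finds; it changes nothing on the system.

# Assumption: MemTotal on a "2 GB" machine is usually a bit below 2 GiB,
# so the default threshold (in kB) is set slightly lower. Override if needed.
MIN_MEM_KB=${MIN_MEM_KB:-1900000}

# meets_min CPUS MEM_KB: succeed if there are at least 2 CPUs and MIN_MEM_KB of RAM
meets_min() {
  [ "$1" -ge 2 ] && [ "$2" -ge "$MIN_MEM_KB" ]
}

# swap_off [SWAPS_FILE]: /proc/swaps always has one header line;
# any additional line is an active swap area
swap_off() {
  [ "$(wc -l < "${1:-/proc/swaps}")" -le 1 ]
}

cpus=$(nproc)
mem_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)

if meets_min "$cpus" "$mem_kb"; then
  echo "CPU/RAM ok: $cpus CPUs, $mem_kb kB"
else
  echo "CPU/RAM below the minimum: $cpus CPUs, $mem_kb kB"
fi

if swap_off; then
  echo "swap is off"
else
  echo "swap is still on (try: sudo swapoff -a)"
fi
```

The script only prints its findings, so it is safe to run on a node that is already part of a cluster.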

The system used here is Ubuntu 16.04.5, with one master node and four slave nodes, running k8s v1.13, the latest release at the time of writing.

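2.1 Installing Docker

1) Installing the Docker packages

Docker must be installed on both the master and the slave nodes.

My machines are on the China education network (CERNET), where the Tsinghua University (TUNA) mirror is faster, so the installation uses that mirror.

Install the dependencies:

```shell
sudo apt-get install apt-transport-https ca-certificates curl gnupg2 software-properties-common
```

Trust Docker's GPG public key:

```shell
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
```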

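On amd64 machines, add the package repository:

```shell
sudo add-apt-repository \
   "deb [arch=amd64] https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/ubuntu \
   $(lsb_release -cs) \
   stable"
```

Finally, install Docker:

```shell
sudo apt-get update
sudo apt-get install docker-ce
```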

After installation, check the version:

```shell
docker --version
```

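2) Running docker without sudo

To let a regular user run docker commands without sudo, add the current user to the docker group:

```shell
sudo usermod -aG docker $USER
```

The new group membership takes effect after logging out and back in.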

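3) Registry mirror

On systems that use systemd, write the following into /etc/docker/daemon.json (create the file if it does not exist).

This uses Docker's official China registry mirror; the Alibaba Cloud or USTC mirrors work as well.

```json
{
  "registry-mirrors": ["https://registry.docker-cn.com"]
}
```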

Then restart the service:

```shell
sudo systemctl daemon-reload
sudo systemctl restart docker
```

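4) Disabling swap

Disable swap on every node, master and slaves alike: by default kubelet refuses to start while swap is enabled.

For the background on why swap must be disabled, see the GitHub issue "Kubelet/Kubernetes should work with Swap Enabled".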

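To disable it temporarily:

```shell
sudo swapoff -a
```

To disable it permanently, edit /etc/fstab and comment out the lines that reference swap. The file begins with a header like this:

```
# <file system> <mount point> <type> <options> <dump> <pass>
```

Then reboot the machine.

To verify, run `top`: if `total` in the `KiB Swap` row shows 0, swap is off.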
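Commenting out the swap entries can also be done non-interactively. The sketch below assumes the standard six-field fstab layout, where the third field is the filesystem type; `comment_swap_entries` is a hypothetical helper, and it keeps a backup of the original file as `FILE.bak`.

```shell
#!/bin/sh
# comment_swap_entries FILE: prefix "#" to every active fstab entry whose third
# field (the filesystem type) is "swap". The original is kept as FILE.bak.
comment_swap_entries() {
  file=$1
  cp "$file" "$file.bak" || return 1
  awk '
    $1 ~ /^#/    { print; next }          # leave existing comments alone
    $3 == "swap" { print "#" $0; next }   # disable active swap entries
                 { print }                # keep every other line unchanged
  ' "$file.bak" > "$file"
}
```

Call it as root with `/etc/fstab` as the argument, then reboot (or run `sudo swapoff -a`). Because commented lines are skipped, running it twice does not add a second `#`.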

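2.2 Installing kubeadm, kubelet and kubectl

All three packages must be installed on both the master and the slave nodes.

1) Installing from a mirror

Either the Tsinghua or the USTC mirror can be used to speed up installation.

First, set up authentication for the package source (run as root):

```shell
apt-get update && apt-get install -y apt-transport-https curl
```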

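Then add the USTC repository and install the packages:

```shell
cat <<EOF > /etc/apt/sources.list.d/kubernetes.list
deb http://mirrors.ustc.edu.cn/kubernetes/apt kubernetes-xenial main
EOF
apt-get update
apt-get install -y kubelet kubeadm kubectl
```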

2.3 Installing the k8s cluster

1) cgroup configuration

Configure the cgroup driver on the master node.

Check which cgroup driver Docker uses:

```shell
docker info | grep -i cgroup
```

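kubelet, however, is configured with the systemd driver. The two do not match, so fix it as follows:

```shell
sudo vim /etc/systemd/system/kubelet.service.d/10-kubeadm.conf
```

Add the following line:

```
Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"
```

or, to also pull the pause image from the Alibaba Cloud mirror:

```
Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"
```

Restart kubelet:

```shell
sudo systemctl daemon-reload
sudo systemctl restart kubelet
```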
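An `Environment=` line in the drop-in only takes effect if kubelet's `ExecStart` line actually references that variable. The drop-in shipped with recent kubeadm packages references `$KUBELET_EXTRA_ARGS` (read from `/etc/default/kubelet` on Debian/Ubuntu) rather than `$KUBELET_CGROUP_ARGS`, so it is worth checking which one your file uses. The sketch below, assuming the `docker info` line format matched by `grep -i cgroup`, extracts Docker's driver and prints a matching `KUBELET_EXTRA_ARGS` line; `cgroup_driver` and `kubelet_extra_args` are hypothetical helper names.

```shell
#!/bin/sh
# cgroup_driver: read `docker info` output on stdin and print the cgroup driver,
# e.g. the line " Cgroup Driver: cgroupfs" yields "cgroupfs".
cgroup_driver() {
  awk -F': *' 'tolower($1) ~ /cgroup driver$/ { print $2; exit }'
}

# kubelet_extra_args DRIVER: the line to put in /etc/default/kubelet
kubelet_extra_args() {
  echo "KUBELET_EXTRA_ARGS=--cgroup-driver=$1"
}

if command -v docker >/dev/null 2>&1; then
  driver=$(docker info 2>/dev/null | cgroup_driver)
  if [ -n "$driver" ]; then
    echo "Docker cgroup driver: $driver"
    echo "Suggested /etc/default/kubelet line: $(kubelet_extra_args "$driver")"
  fi
fi
```

After writing that line, reload systemd and restart kubelet as shown above.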

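2) Preparing the images

kubeadm automates cluster installation and configuration here. During installation it pulls images from Google's registry, which fails behind the Great Firewall, so the images are pulled in advance from a mirror and retagged.

The core services started on the master and slave nodes are: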

| Master node | Slave node |
|---|---|
| etcd-master | Pod network add-on (e.g. Calico, flannel) |
| kube-apiserver | kube-proxy |
| kube-controller-manager | other apps |
| kube-dns / CoreDNS | |
| Pod network add-on (e.g. Calico, flannel) | |
| kube-proxy | |
| kube-scheduler | |

List the images that the installed kubeadm version needs at startup:

```shell
kubeadm config images list
```

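Pull each image from the mirrorgooglecontainers repository on Docker Hub and retag it with the k8s.gcr.io name kubeadm expects, for example:

```shell
docker pull mirrorgooglecontainers/kube-apiserver:v1.13.3
docker tag mirrorgooglecontainers/kube-apiserver:v1.13.3 k8s.gcr.io/kube-apiserver:v1.13.3
```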

I use a script to automate pulling and retagging:

```shell
cat k8s_images_get.sh
```
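A minimal sketch of such a script follows. It is not the author's `k8s_images_get.sh`; it assumes the Docker Hub repositories used above (`mirrorgooglecontainers/*` for most images, `coredns/coredns` for CoreDNS) still carry the needed tags, and the helper names are hypothetical. Set `DRY_RUN=1` to print the commands instead of running them.

```shell
#!/bin/sh
# Pull every image kubeadm needs from a Docker Hub mirror, retag it with the
# k8s.gcr.io name kubeadm expects, then drop the mirror tag.

# mirror_for IMAGE: map a k8s.gcr.io image name to its Docker Hub mirror
mirror_for() {
  name=${1#k8s.gcr.io/}
  case $name in
    coredns:*) echo "coredns/$name" ;;               # CoreDNS is published as coredns/coredns
    *)         echo "mirrorgooglecontainers/$name" ;;
  esac
}

# run CMD...: execute CMD, or only print it when DRY_RUN=1
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# pull_and_retag IMAGE...: fetch each image via its mirror and retag it
pull_and_retag() {
  for image in "$@"; do
    src=$(mirror_for "$image")
    run docker pull "$src" && run docker tag "$src" "$image" && run docker rmi "$src"
  done
}

# Only act when kubeadm is installed; the image list comes from kubeadm itself.
if command -v kubeadm >/dev/null 2>&1; then
  pull_and_retag $(kubeadm config images list 2>/dev/null)
fi
```

Taking the list from `kubeadm config images list` keeps the tags in step with the installed kubeadm version, so the script does not need editing after an upgrade.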

3) Checking ports

Master node

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 6443* | Kubernetes API server | All |
| TCP | Inbound | 2379-2380 | etcd server client API | kube-apiserver, etcd |
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 10251 | kube-scheduler | Self |
| TCP | Inbound | 10252 | kube-controller-manager | Self |

Ports marked with an asterisk can be overridden.

Worker nodes

| Protocol | Direction | Port Range | Purpose | Used By |
|---|---|---|---|---|
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 30000-32767 | NodePort Services** | All |

The double asterisk marks the default port range for NodePort Services.
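A quick way to see whether something already listens on the ports in these tables is to parse the output of `ss -ltn` (iproute2). The sketch below assumes the default `ss` column layout, where the fourth column is the local `address:port`; `port_listening` is a hypothetical helper, and the check covers local listeners only, not security groups or firewalls.

```shell
#!/bin/sh
# port_listening PORT: read `ss -ltn` output on stdin and succeed if some socket
# listens on PORT. Column 4 is the local address; the port follows the last ':'.
port_listening() {
  awk -v p="$1" '
    NR > 1 { n = split($4, a, ":"); if (a[n] == p) found = 1 }
    END    { if (found) exit 0; exit 1 }
  '
}

# Master-node ports from the table above (on worker nodes, check 10250).
if command -v ss >/dev/null 2>&1; then
  listening=$(ss -ltn)
  for port in 6443 2379 2380 10250 10251 10252; do
    if printf '%s\n' "$listening" | port_listening "$port"; then
      echo "port $port is already in use"
    fi
  done
fi
```

Splitting on the last `:` handles both IPv4 (`0.0.0.0:6443`) and IPv6 (`[::]:6443`) addresses.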

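4) Initializing kubeadm

Initialization also needs the coredns image, which must likewise be pulled in advance. The required coredns version depends on the k8s version; running kubeadm init once and reading its error message shows which version is needed.

```shell
docker pull coredns/coredns:1.2.6
docker tag coredns/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6
```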

Initialize the master node:

```shell
sudo kubeadm init --apiserver-advertise-address=<your ip> --pod-network-cidr=10.244.0.0/16
```

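Common kubeadm init flags:

- `--kubernetes-version`: the Kubernetes version to install. If omitted, kubeadm downloads the latest version information from Google's servers.
- `--pod-network-cidr`: the IP range for the pod network. Its value depends on the network add-on chosen in the next step; flannel is used here, hence `--pod-network-cidr=10.244.0.0/16`. Note that flannel's default manifest expects exactly this CIDR, so do not change it.
- `--apiserver-advertise-address`: the IP address on which the master advertises the API server. If omitted, kubeadm detects a network interface automatically, usually the internal IP.
- `--feature-gates=CoreDNS`: whether to use CoreDNS (true/false). The CoreDNS add-on was promoted to Beta in 1.10 and became the kubeadm default in 1.11.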
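The same settings can also be kept in a file and passed with `kubeadm init --config`. The sketch below writes a configuration for the `kubeadm.k8s.io/v1beta1` API used by kubeadm v1.13. It assumes the master IP is supplied in `ADVERTISE_ADDRESS` (the default 192.168.1.10 is a placeholder) and that the Alibaba Cloud `google_containers` repository mentioned earlier carries every control-plane image, which would make manual retagging unnecessary. kubeadm refuses to mix `--config` with most of the flags above, so use one approach or the other.

```shell
#!/bin/sh
# Write a kubeadm v1beta1 config equivalent to the init flags above.
# ADVERTISE_ADDRESS is a placeholder; set it to the master's IP.
ADVERTISE_ADDRESS=${ADVERTISE_ADDRESS:-192.168.1.10}

cat > kubeadm-config.yaml <<EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${ADVERTISE_ADDRESS}
---
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.13.3
# Assumption: this mirror holds every control-plane image, including coredns
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16   # must match flannel's default network
EOF

echo "Wrote kubeadm-config.yaml; next: sudo kubeadm init --config kubeadm-config.yaml"
```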

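After a successful init, configure kubectl for the regular user:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```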

Test on the master node:

```shell
curl -k https://127.0.0.1:6443
# or
curl -k https://<master-ip>:6443
```

If the API server is running, it answers with a JSON object.

To reset (undo) the initialization:

```shell
sudo kubeadm reset
```

If you forget the token, list it with:

```shell
sudo kubeadm token list
```

5) Installing the pod network add-on

Many add-ons are available (see the official list of pod network add-ons). flannel is used here; the choice of network can be changed later.

```shell
kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml
```

6) Joining nodes

Run the following command on each slave node to join it to the cluster:

```shell
sudo kubeadm join --token <token> <master-ip>:<master-port> --discovery-token-ca-cert-hash sha256:<hash>
```
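The `<hash>` in the join command is the SHA-256 of the cluster CA's DER-encoded public key. Following the recipe in the kubeadm documentation, it can be recomputed on the master from `/etc/kubernetes/pki/ca.crt` with `openssl`; `ca_cert_hash` is a hypothetical helper name. Bootstrap tokens expire after 24 hours by default, so for a node added later, `kubeadm token create --print-join-command` prints a fresh, complete join command.

```shell
#!/bin/sh
# ca_cert_hash CERT: print the hex SHA-256 of CERT's DER-encoded public key,
# i.e. the value that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# On the master, the cluster CA written by kubeadm lives here.
CA=/etc/kubernetes/pki/ca.crt
if [ -r "$CA" ]; then
  printf '%s\n' "--discovery-token-ca-cert-hash sha256:$(ca_cert_hash "$CA")"
fi
```

The final `sed` keeps only the text after the last space, which strips the `(stdin)= ` style prefix that `openssl dgst` prints.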

Check which nodes have joined:

```shell
kubectl get nodes
```

If a node has not joined, check whether the required images were pulled successfully on that node.

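III. Dashboard Installation

3.1 Installing the dashboard

Once the cluster is up, you can install the dashboard to view it in a browser.

1) Preparing the image

The dashboard needs this image:

```
k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1
```

As before, pull it from Docker Hub first and then retag it.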

Install the dashboard. The manifest URL differs between versions, so follow the link in the official installation instructions:

```shell
kubectl create -f https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml
```

If the installation tries to pull the dashboard image again and cannot reach k8s.gcr.io, download the manifest and change the image name by hand:

```shell
wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml
```

2) Configuring the access port

Change `type: ClusterIP` to `type: NodePort`:

```shell
kubectl -n kube-system edit service kubernetes-dashboard
```

Check the dashboard service:

```shell
kubectl -n kube-system get service kubernetes-dashboard
```

In this case k8s maps the in-cluster address 10.97.229.208:443 to port 31090 on the nodes, so the dashboard is reachable in a browser at https://<Master IP>:31090.

3) Configuring an admin account

Create kubernetes-dashboard-admin.yaml with the following content:

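A typical kubernetes-dashboard-admin.yaml for this purpose defines a ServiceAccount and binds it to the built-in `cluster-admin` ClusterRole. The sketch below is an assumption about what such a file contains, not the author's exact file; the name `kubernetes-dashboard-admin` is chosen so that the token lookup later in this section (`grep kubernetes-dashboard-admin`) finds its secret. Binding to `cluster-admin` grants full control of the cluster, so this suits a test setup only.

```shell
#!/bin/sh
# Write a ServiceAccount plus a ClusterRoleBinding to the cluster-admin role.
cat > kubernetes-dashboard-admin.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kube-system
EOF
```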

Then run `kubectl create -f kubernetes-dashboard-admin.yaml`.

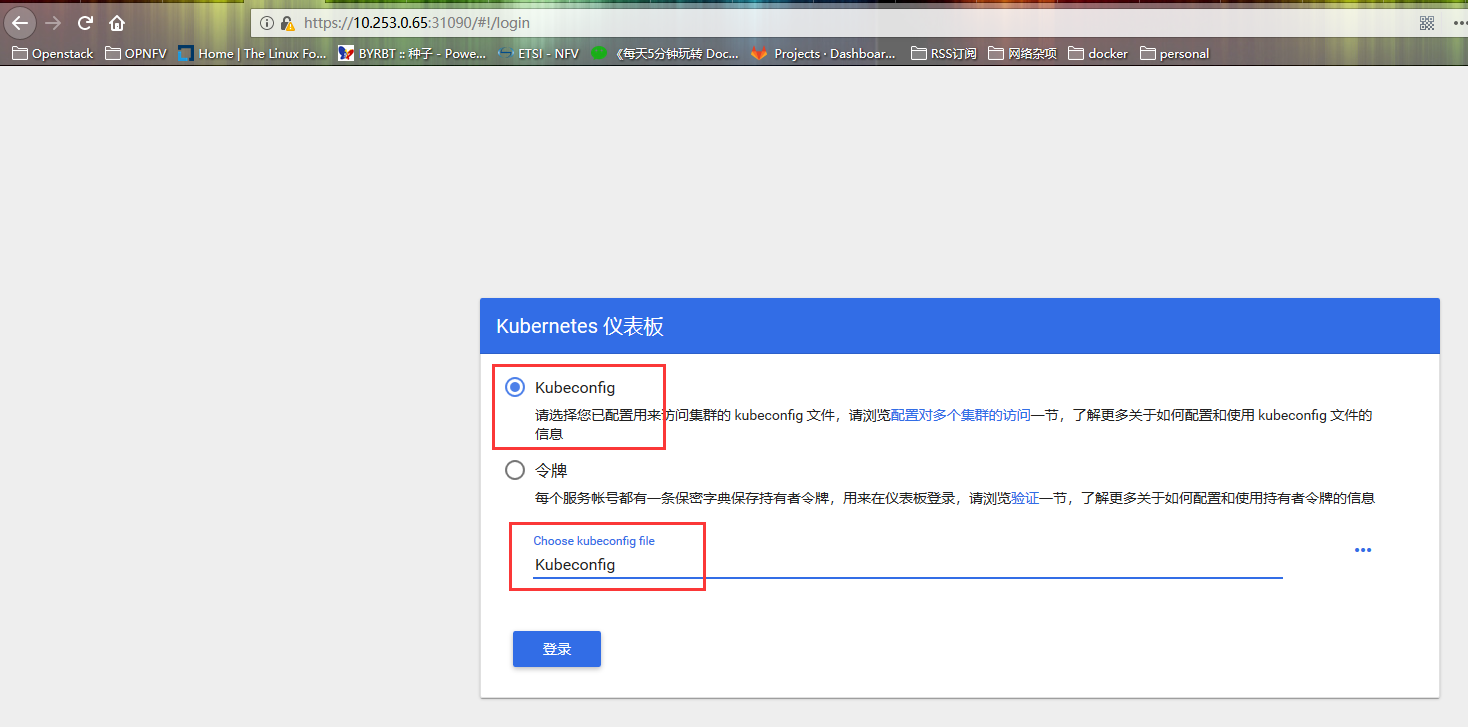

4) Logging in

Kubeconfig login

Create an admin user

file: admin-role.yaml

```yaml
kind: ClusterRoleBinding
```

Then run `kubectl create -f admin-role.yaml` and look up the generated token secret:

```shell
kubectl -n kube-system get secret | grep admin-token
```

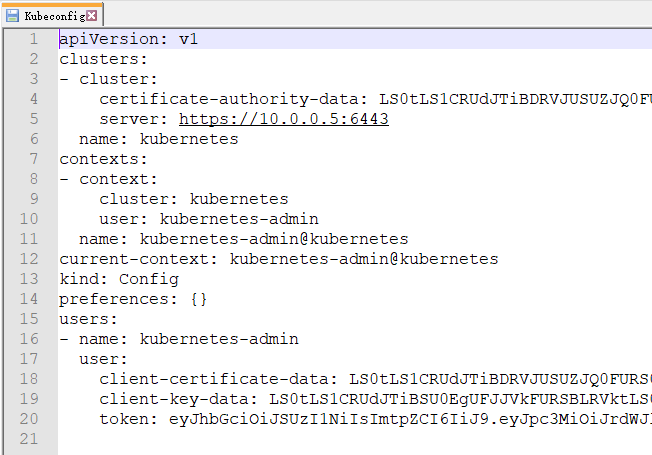

Set up the Kubeconfig file:

```shell
cp ~/.kube/config Kubeconfig
```

Its content is as follows:

Token login

Get a token:

```shell
kubectl -n kube-system describe $(kubectl -n kube-system get secret -o name | grep namespace) | grep token
```

Get the admin token:

```shell
kubectl -n kube-system describe secret/$(kubectl -n kube-system get secret | grep kubernetes-dashboard-admin | awk '{print $1}') | grep token
```
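The trailing `grep token` also matches other lines that contain the word, such as the secret's `Name` and `Type`. The small filter below keeps only the token value, assuming the usual `kubectl describe secret` layout in which the value follows `token:` on its own line; `token_from_describe` is a hypothetical helper.

```shell
#!/bin/sh
# token_from_describe: read `kubectl describe secret` output on stdin and print
# only the value on the line whose first field is "token:".
token_from_describe() {
  awk '$1 == "token:" { print $2 }'
}

if command -v kubectl >/dev/null 2>&1; then
  # Secret names look like secret/kubernetes-dashboard-admin-token-xxxxx
  secret=$(kubectl -n kube-system get secret -o name | grep kubernetes-dashboard-admin-token | head -n 1)
  if [ -n "$secret" ]; then
    kubectl -n kube-system describe "$secret" | token_from_describe
  fi
fi
```

The printed value can be pasted directly into the dashboard's Token login field.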

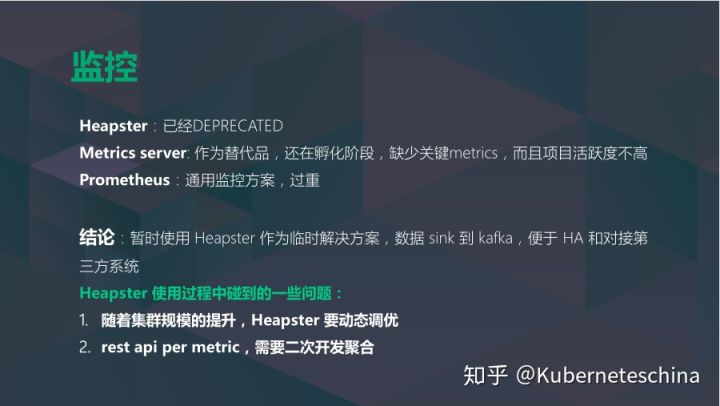

3.2 Integrating heapster monitoring

Heapster is deprecated but still maintained, so it can still be used here.

1) Installing heapster

```shell
mkdir heapster
```

Then edit heapster.yaml:

```
--source=kubernetes:https://10.0.0.1:6443    # replace with your own master IP
```

or

```
--source=kubernetes.summary_api:https://kubernetes.default.svc?inClusterConfig=false&kubeletHttps=true&kubeletPort=10250&insecure=true&auth=
```

Switch the images to mirrorgooglecontainers:

```shell
sed -i "s/k8s.gcr.io/mirrorgooglecontainers/" *.yaml
```

Grant heapster access to the API by editing heapster-rbac.yaml:

```shell
cat heapster-rbac.yaml
```

Then deploy:

```shell
kubectl create -f .
```

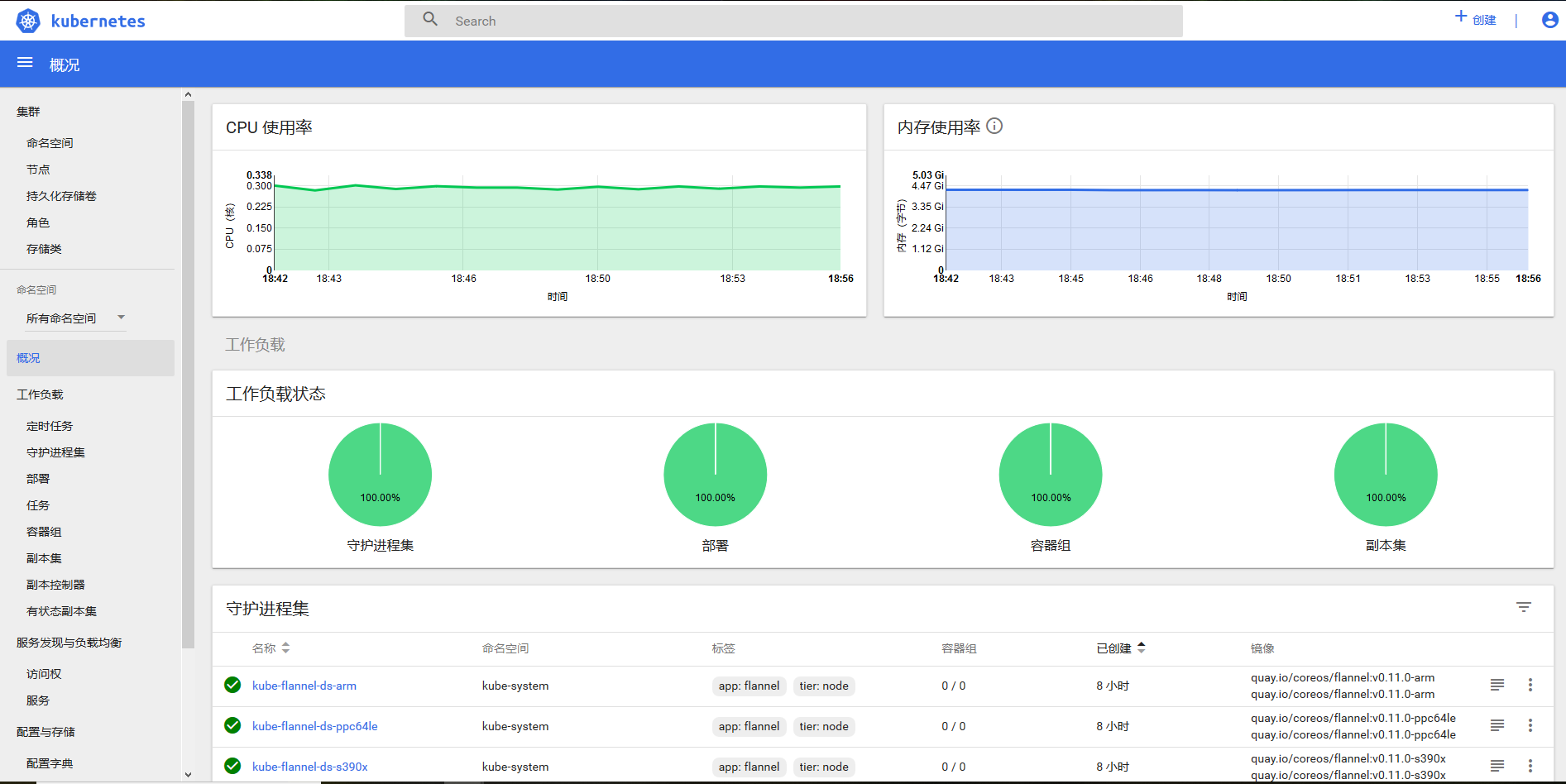

The result:

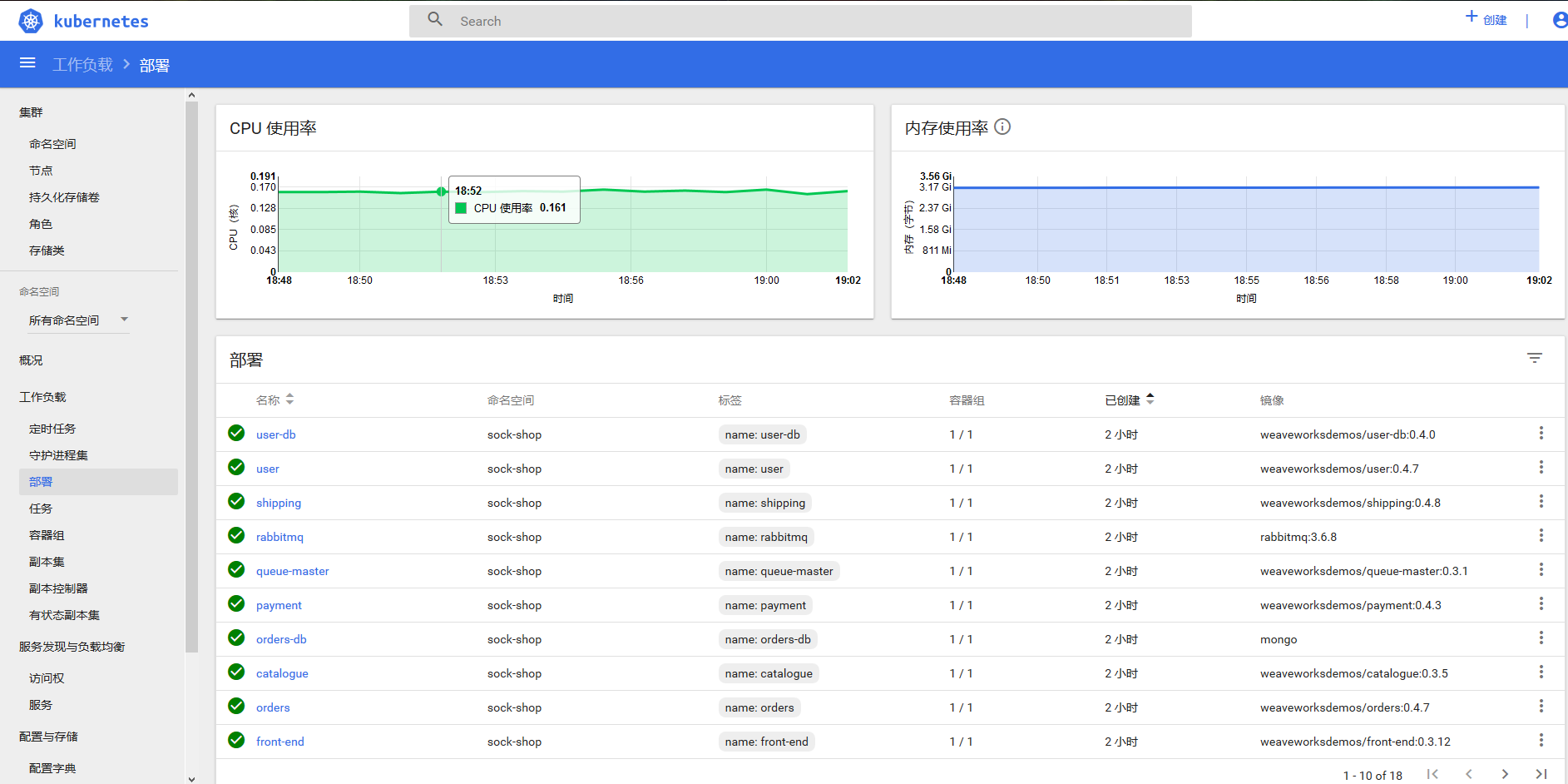

IV. Test Application Deployment

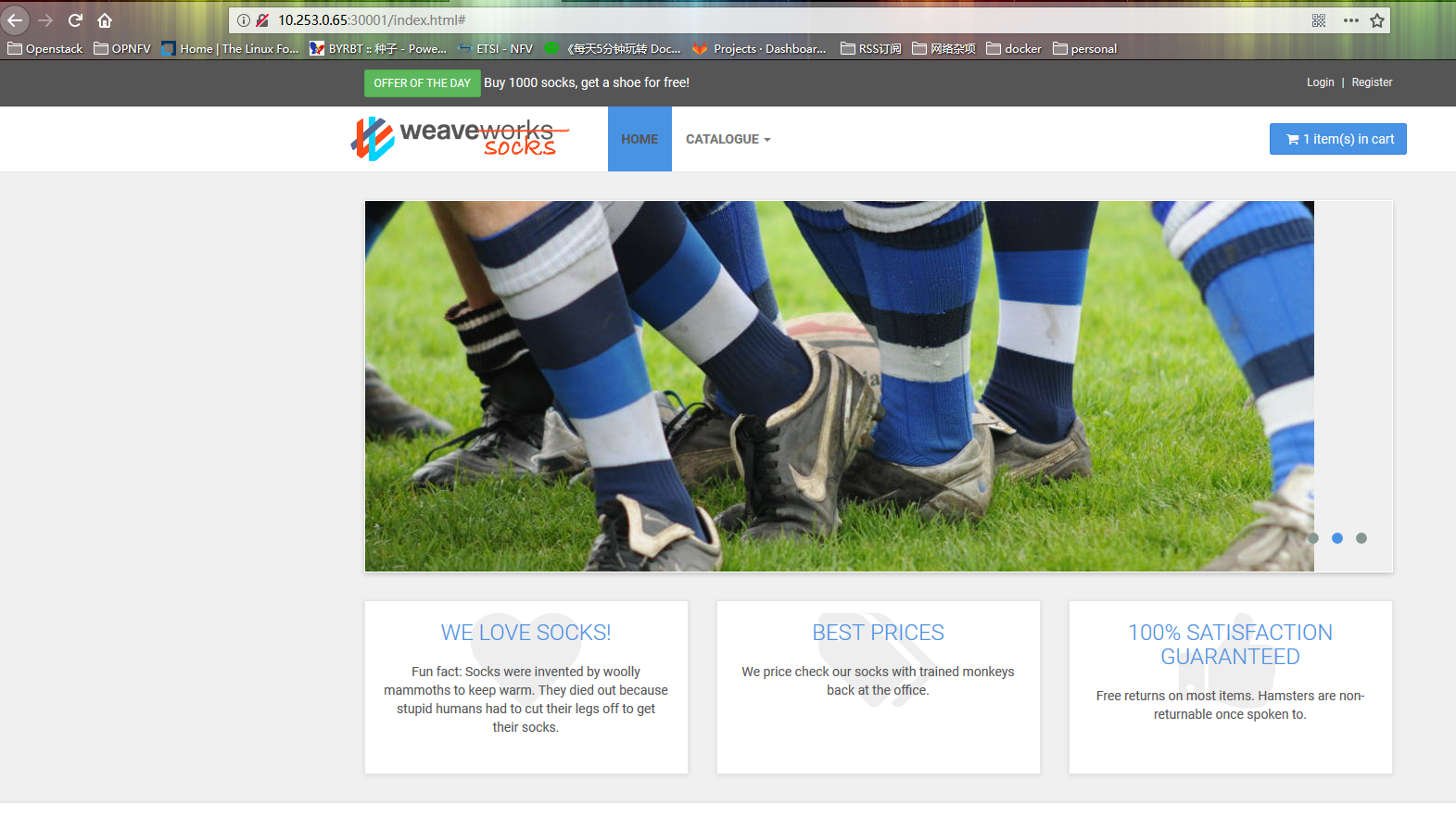

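Sock Shop, a demo sock store deployed as a set of microservices, is used to test application deployment:

```shell
kubectl create namespace sock-shop
kubectl apply -n sock-shop -f "https://github.com/microservices-demo/microservices-demo/blob/master/deploy/kubernetes/complete-demo.yaml?raw=true"
```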

Once all pods are in the Running state, the installation has succeeded:

```shell
kubectl -n sock-shop get deployment
```
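Instead of re-running the command by hand, the wait can be scripted. The sketch below assumes the default `kubectl get pods` columns (NAME, READY, STATUS, ...) and treats the namespace as ready when every pod is `Running` with all of its containers ready; `all_pods_ready` and `wait_for_namespace` are hypothetical helpers. On kubectl v1.11 and later, `kubectl -n sock-shop wait --for=condition=Ready pod --all --timeout=600s` is a built-in alternative.

```shell
#!/bin/sh
# all_pods_ready: read `kubectl get pods` output on stdin; succeed only if at least
# one pod is listed and every pod is Running with READY showing n/n.
all_pods_ready() {
  awk '
    NR > 1 {
      total++
      split($2, r, "/")
      if ($3 != "Running" || r[1] != r[2]) bad++
    }
    END { if (total > 0 && bad == 0) exit 0; exit 1 }
  '
}

# wait_for_namespace NS: poll every 10 s, giving up after about 10 minutes
wait_for_namespace() {
  i=0
  until kubectl -n "$1" get pods 2>/dev/null | all_pods_ready; do
    i=$((i + 1))
    if [ "$i" -ge 60 ]; then return 1; fi
    sleep 10
  done
}
```

For example: `wait_for_namespace sock-shop && echo "sock-shop is up"`.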

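The official manifest already exposes the front-end port, so the shop can be accessed without changes. If the port is not exposed, edit the service manually:

```shell
kubectl -n sock-shop edit svc front-end
```

If adding items to the shopping cart works, the cluster has been set up successfully.

To uninstall Sock Shop: `kubectl delete namespace sock-shop`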